In recent months, the democratization of generative AI has been phenomenal. The best-known generative AI tool, ChatGPT, has already attracted well over 100 million users, a pace of growth that not even the biggest and most successful companies have matched. New improvements and use cases appear daily, and dozens of new generative AI tools are released each month.

People and pundits are talking about a general-purpose technology revolution. Time will tell, but one thing is certain: cybersecurity is a strong candidate to benefit from the generative AI revolution. Indeed, generative AI can bring many benefits to cybersecurity and help answer the challenges the field is facing.

The growing complexity of cybersecurity threats calls for innovative solutions, and integrating generative AI into cybersecurity can significantly enhance security operations. Generative AI can help automate tasks, reduce noise, and prioritize threats, allowing organizations to combat the ever-evolving cyber threat landscape effectively. Combined with orchestration and automation capabilities, this has the potential for significant disruption. We have already discussed these challenges at length, but today we will assess them through the lens of generative AI.

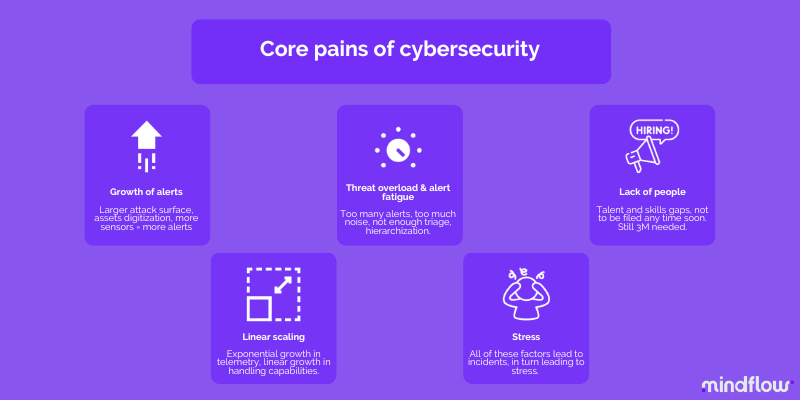

1. Harsh reminder about cybersecurity pains

Growth of alerts.

As the threat landscape evolves and organizations increasingly rely on digital systems, the number of alerts generated by security tools has grown exponentially. Much of this is noise, as we said in earlier articles, but the past year has also seen a dramatic increase in actual attacks. As a result, alerts range from false positives to genuine threats, making it challenging for security teams to stay on top of all the notifications.

Threat overload and alert fatigue.

With the ever-growing volume of alerts, it becomes difficult to distinguish between critical issues that warrant immediate attention and lower-priority concerns that can be addressed later. As security teams struggle to manage the overwhelming number of alerts, they may experience threat overload and alert fatigue.

Threat overload occurs when an organization has too many threats to track and mitigate effectively. In short, there are not enough hands to identify, quarantine, and remediate them. Alert fatigue, by contrast, occurs when security personnel become desensitized to alerts because of their sheer volume.

Both threat overload and alert fatigue lead to missed threats, as overwhelmed security teams overlook incidents or fail to prioritize their response to potential breaches.

Lack of trained experts.

The rapid evolution of the cybersecurity landscape has resulted in a human and skills gap. The figure is well known: the worldwide shortage of trained cybersecurity experts is around 3 million. That is 3 million missing professionals who could otherwise help address the myriad of threats organizations face today.

This lack of expertise is exacerbated by the high demand for skilled cybersecurity professionals. That demand makes it difficult for organizations to attract and retain top talent and favors the happy few who can afford it. This can lead to a lack of institutional knowledge and experience, further hampering an organization’s ability to defend against cyber threats effectively.

Linear scaling of security operations.

As organizations grow, they digitize further, deploy assets in the cloud, and expand their attack surface. Their security needs become more complex as the number of assets and endpoints grows, and telemetry rises exponentially as a result.

Faced with this exponential increase in telemetry, security operations often scale only linearly. The workload of managing and defending these systems therefore weighs ever more heavily on security teams that can only do so much.

This linear scaling of security operations can result in significant toil: manual, repetitive, and automatable tasks devoid of enduring value. Toil consumes valuable time and resources. It also keeps security professionals from focusing on more strategic tasks, such as threat modeling, root cause analysis, or risk management.

Stress in security teams.

As we have already seen, all these factors create an endless loop. People in security teams are constantly harassed by noise, overload, or inadequate tools, and they lack the means to break out of it. This state of affairs contributes to high stress levels among personnel who cannot focus on their primary responsibilities, such as analyzing potential threats and developing effective countermeasures.

Furthermore, this harsh environment can lead to miscommunication and confusion among team members, potentially hampering their ability to work together effectively.

2. What AI brings to the table

Today or within the next five years, generative AI could bring some of the following benefits, or perhaps more. That depends on further progress in specific domains, such as reinforcement learning or casuistic reasoning.

Simplify complexity and reduce noise.

One of the most significant advantages of AI and ML technologies in cybersecurity is their ability to simplify complex data and find correlations between disparate data sets. Variational Autoencoders (VAEs) learn the underlying structure of data through unsupervised learning. This makes them particularly adept at capturing complex data distributions, and they can generate new samples representative of the original data.

Hence, VAEs can model normal network behavior and detect anomalies by comparing new data points with the learned distribution. Modeling these complex data patterns will enhance threat detection capabilities, particularly for identifying previously unknown or zero-day attacks.
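Training a real VAE takes more than a blog-sized snippet. However, the detection step described above can be sketched with a deliberately simpler stand-in: learn what normal looks like, then score how far a new observation falls from it.

The Python below is not a VAE. It fits a per-feature Gaussian baseline to normal traffic and uses the largest z-score as the anomaly score. A VAE-based detector would typically use reconstruction error or a likelihood estimate instead. The feature names (`bytes_out`, `packets`, `dst_ports`) and the threshold of 3.0 are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Dict, List, Sequence

# Hypothetical per-flow features (assumption, not from any specific product).
FEATURES = ("bytes_out", "packets", "dst_ports")


@dataclass
class Baseline:
    """Per-feature mean and standard deviation learned from normal traffic."""
    means: Dict[str, float]
    stds: Dict[str, float]


def fit_baseline(normal: Sequence[Dict[str, float]]) -> Baseline:
    """Learn a simple model of normal behavior (a stand-in for a trained VAE)."""
    means = {f: mean(row[f] for row in normal) for f in FEATURES}
    # Fall back to 1.0 for zero-variance features so scoring never divides by zero.
    stds = {f: pstdev([row[f] for row in normal]) or 1.0 for f in FEATURES}
    return Baseline(means, stds)


def anomaly_score(model: Baseline, point: Dict[str, float]) -> float:
    """Largest absolute z-score across features: how far the point is from normal."""
    return max(abs(point[f] - model.means[f]) / model.stds[f] for f in FEATURES)


def detect(model: Baseline, points: List[Dict[str, float]], threshold: float = 3.0) -> List[int]:
    """Return the indices of points whose score exceeds the (assumed) threshold."""
    return [i for i, p in enumerate(points) if anomaly_score(model, p) > threshold]


if __name__ == "__main__":
    normal = [
        {"bytes_out": 1000 + 10 * i, "packets": 20 + i % 3, "dst_ports": 2 + i % 2}
        for i in range(50)
    ]
    model = fit_baseline(normal)
    new = [
        {"bytes_out": 1200, "packets": 21, "dst_ports": 3},     # looks like normal traffic
        {"bytes_out": 95000, "packets": 900, "dst_ports": 45},  # exfiltration-like spike
    ]
    print(detect(model, new))  # [1]
```

The structure stays the same with a real VAE. You fit a model on normal data, score new points against it, and flag those above a threshold. Only `fit_baseline` and `anomaly_score` would change.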

By incorporating reinforcement learning, generative AI models can adapt and improve their performance based on environmental feedback. This capability will help AI models learn to identify and respond to new threats more effectively over time, thus remaining up-to-date with the evolving threat landscape.

Implement AI and ML to lighten the burden.

On top of reducing the noise, implementing AI and ML technologies can significantly lighten the burden on security teams by automating manual, repetitive tasks that contribute to toil. For example, AI-powered tools can automatically categorize alerts based on their severity and potential impact, allowing security professionals to focus on more strategic tasks, such as threat hunting and incident response.

Additionally, AI and ML technologies can help automate vulnerability management, enabling organizations to identify and remediate system weaknesses more efficiently.
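Here is a minimal Python sketch that roughly illustrates the alert categorization mentioned above. It ranks alerts using three inputs:

- severity;
- potential impact, modeled as the criticality of the affected asset;
- a confidence value that, in an AI-powered tool, might come from a model's estimate that the alert is a true positive.

The field names, weights, thresholds and queue labels are all hypothetical. Real products define their own scoring.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumed severity weights; real tools use their own scales.
SEVERITY_WEIGHT = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass
class Alert:
    alert_id: str
    severity: str            # as reported by the source tool (hypothetical field)
    asset_criticality: int   # 1 (lab machine) to 3 (critical server), assumed scale
    confidence: float        # 0.0 to 1.0, e.g. a model's true-positive probability


def priority_score(alert: Alert) -> float:
    """Combine severity, business impact and confidence into a single number."""
    return SEVERITY_WEIGHT[alert.severity] * alert.asset_criticality * alert.confidence


def categorize(alert: Alert) -> str:
    """Map the score to a work queue; thresholds are illustrative assumptions."""
    score = priority_score(alert)
    if score >= 6:
        return "P1-investigate-now"
    if score >= 2:
        return "P2-review-today"
    return "P3-auto-close-candidate"


def triage(alerts: List[Alert]) -> List[Tuple[str, str]]:
    """Sort alerts so analysts see the highest-impact ones first."""
    ranked = sorted(alerts, key=priority_score, reverse=True)
    return [(a.alert_id, categorize(a)) for a in ranked]


if __name__ == "__main__":
    alerts = [
        Alert("a3", "high", 1, 0.2),
        Alert("a1", "critical", 3, 0.9),
        Alert("a2", "medium", 2, 0.6),
    ]
    print(triage(alerts))
    # [('a1', 'P1-investigate-now'), ('a2', 'P2-review-today'), ('a3', 'P3-auto-close-candidate')]
```

A simple product keeps the ranking easy to explain to analysts. A learned model could replace `priority_score` without changing the rest of the pipeline.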

Use of threat intelligence to identify and prioritize threats.

Threat intelligence is vital in modern cybersecurity operations, helping organizations identify and protect against the most critical threats. As we saw above, generative AI technologies can significantly enhance the effectiveness of threat intelligence by collecting, analyzing, and correlating vast amounts of data from various sources.

This process allows organizations to better understand the tactics, techniques, and procedures used by threat actors, enabling them to prioritize their defenses and allocate resources more effectively. Recent announcements from Google and Microsoft are worth monitoring closely.
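The sketch below makes the correlation step more concrete. It cross-references indicators of compromise from several threat intelligence feeds with sightings in an organization's own telemetry. It then ranks the indicators that are both externally corroborated and locally observed.

The feed names and indicators are hypothetical. The IP addresses come from documentation-reserved ranges, and the domain uses the reserved `.example` TLD. A generative AI system would work on far richer, often unstructured data. This only shows the underlying idea of prioritizing by corroboration.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set, Tuple


def correlate(
    feeds: Dict[str, Set[str]],
    local_events: Iterable[str],
) -> List[Tuple[str, int, int]]:
    """Rank indicators by feed corroboration and local sightings.

    feeds: feed name -> set of indicators (IPs, domains, hashes) it reports.
    local_events: indicators seen in our own telemetry, one entry per sighting.
    Returns (indicator, feed_count, local_sightings) for indicators that are
    both reported by at least one feed and seen locally, most corroborated first.
    """
    feed_count: Counter = Counter()
    for indicators in feeds.values():
        feed_count.update(indicators)  # sets, so each feed counts once per indicator
    sightings = Counter(local_events)
    hits = [
        (ioc, feed_count[ioc], sightings[ioc])
        for ioc in sightings
        if feed_count[ioc] > 0  # keep only indicators some feed vouches for
    ]
    # Corroboration across feeds first, local volume as the tiebreaker.
    return sorted(hits, key=lambda h: (h[1], h[2]), reverse=True)


if __name__ == "__main__":
    feeds = {
        "feed_a": {"198.51.100.7", "evil.example"},
        "feed_b": {"198.51.100.7"},
        "feed_c": {"203.0.113.9"},
    }
    local = ["evil.example", "198.51.100.7", "evil.example", "192.0.2.1"]
    print(correlate(feeds, local))  # [('198.51.100.7', 2, 1), ('evil.example', 1, 2)]
```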

By leveraging AI-powered threat intelligence enhanced with reinforcement learning, organizations can focus on the most significant risks and vulnerabilities. They can then protect their critical assets with targeted strategies that generative AI models propose and select, and that humans approve before they are applied.

3. The challenges of generative AI in cybersecurity

Lack of skilled cybersecurity workers.

The loop of cybersecurity pains described above can repeat itself with AI. There aren’t enough cybersecurity workers with adequate skills to tackle the complex challenges of today’s threat landscape. Generative AI has brought remarkable democratization to the complex field of cybersecurity. For all its benefits, though, it still needs skilled workers to feed it the proper instructions.

Integrating AI and security effectively.

While AI and ML technologies offer significant potential for improving cybersecurity operations, integrating these tools effectively can be challenging for organizations. Successful integration requires a deep understanding of existing systems, processes, and security measures, as well as the ability to adapt these elements to accommodate AI-driven solutions.

Speech and language analysis must be fine-tuned to do several things well:

- correctly interpret and carry out instructions from disparate inputs;
- comply with different regulatory frameworks;
- ingest context;
- provide consistent answers to context-based issues.

Developments in reinforcement learning and bandit problems will benefit AI significantly by improving algorithms’ learning curves and strengthening causal reasoning. The same goes for particular fields of machine learning such as sequential decision-making under uncertainty. There, learning takes place in a closed loop between the learning agent and its environment. The agent is not passive: it acts, then learns from the consequences of its actions on the environment.
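The closed loop described above can be illustrated with the simplest bandit algorithm, epsilon-greedy. The agent mostly picks the action with the best average reward so far and occasionally explores another one at random. It then updates its estimates from the feedback each action produces.

In this hypothetical Python sketch, the arms are candidate response playbooks. The reward stands for an analyst confirming that the chosen response was appropriate. The playbook names, the 10% exploration rate and the feedback function are assumptions for illustration only.

```python
import random
from typing import Callable, Dict, List


class EpsilonGreedy:
    """Epsilon-greedy multi-armed bandit: mostly exploit the best-known arm,
    occasionally explore a random one."""

    def __init__(self, arms: List[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.arms = arms
        self.epsilon = epsilon
        self.rng = random.Random(seed)  # seeded so runs are reproducible
        self.counts: Dict[str, int] = {a: 0 for a in arms}
        self.values: Dict[str, float] = {a: 0.0 for a in arms}  # running mean reward

    def select(self) -> str:
        """Explore with probability epsilon, otherwise exploit (ties go to the first arm)."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return max(self.arms, key=lambda a: self.values[a])

    def update(self, arm: str, reward: float) -> None:
        """Incremental mean update from the environment's feedback."""
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


def run(bandit: EpsilonGreedy, feedback: Callable[[str], float], rounds: int) -> Dict[str, int]:
    """Closed loop: act, observe the consequence, learn from it."""
    for _ in range(rounds):
        arm = bandit.select()
        bandit.update(arm, feedback(arm))
    return bandit.counts


if __name__ == "__main__":
    # Hypothetical playbooks; analysts only ever confirm "isolate_host" here.
    playbooks = ["notify_only", "block_ip", "isolate_host"]
    bandit = EpsilonGreedy(playbooks, epsilon=0.1, seed=42)
    counts = run(bandit, lambda arm: 1.0 if arm == "isolate_host" else 0.0, rounds=1000)
    print(counts, bandit.values)
```

Exploration keeps the agent from locking onto an early, mediocre choice. With `epsilon=0` in this setup, it could keep repeating whichever arm it happened to try first.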

Finally, some industries won’t be allowed, by choice or by regulation, to rely on generative AI powered by large data sets. For them, it will be essential to make sense of restricted, limited data sets to solve issues in a given environment, for instance with a model confined to their own network.

All in all, cybersecurity is a critical matter. Errors can lead to dramatic consequences, which is why generative AI in cybersecurity will have to go through ultra-specialization before it becomes standard in operations. Maybe one day, we will have unsupervised algorithms doing the work!

Potential negative impacts of AI on cybersecurity.

Like any other users, attackers quickly got their hands on generative AI tools. The sophistication of what these tools offer them is still limited, but they are increasingly making attackers’ work easier. The best example is phishing emails, still among the top two attack vectors. With generative AI tools, their writing and quality have improved dramatically, and they can be produced much faster.

Some have tried, and succeeded, to create malware this way, but such code lacks the human value-added tactics needed to keep it hidden as long as possible. Take code obfuscation, which converts simple source code into a program that does the same thing but is more difficult for defenders to read and understand. You will have a hard time getting ChatGPT to do it in an advanced way (it can, however, help you de-obfuscate lines of code, muahaha!).

There is also the risk of relying on generative AI models that are not “explainable.” Explainability will help analysts understand the reasoning behind the model’s predictions or generated samples, enabling them to trust and act upon the insights provided by the AI.

Generative AI models without “explainability” risk creating a gap between cybersecurity professionals and models that make decisions based on sometimes weak correlations. Moreover, relying on a “non-explainable” AI would encourage outsourcing skills to a third-party tool. In the end, that means a loss of control over cybersecurity, a risk of uncontrolled decision-making errors, or even a loss of visibility over one’s cybersecurity architecture.

4. Automation platforms to interconnect generative AI tools with your stack

Promote interoperability of tools through exhaustive integration capabilities.

To make the most of AI and ML tools and technologies, organizations will need automation platforms that promote the interoperability of tools. Such platforms should offer exhaustive integration capabilities, enabling seamless communication and data sharing between generative AI solutions and existing systems. By facilitating smooth integration, organizations can create a more cohesive and efficient security infrastructure, allowing them to leverage the full potential of AI and ML technologies in their cybersecurity operations.

Moreover, doing so helps every company get its share of the benefits of generative AI. One risk is that such tools become monopolized by the wealthiest organizations that can afford them. Providing integrations across a whole ecosystem lets any organization gain and keep access to generative AI tools.

Take the full benefits of AI by automating the remaining incident response tasks and staying in control.

Taking full advantage of AI in incident response requires the implementation of an automation platform that can interconnect generative AI tools with other cybersecurity solutions, effectively streamlining the entire remediation process.

Integrating generative AI tools into the incident response process helps organizations quickly analyze security incidents, identify threats, and prioritize their response. Automating remediation tasks can significantly reduce the time needed to mitigate security incidents, minimizing potential damage.

Generative AI will also help identify complex patterns and anomalies that may be difficult for human analysts to detect: next-generation behavioral analysis! This will help reduce the likelihood of false positives and other errors.

Also, by incorporating user-defined checks and balances during remediation, organizations can maintain control and oversight over automated actions and generative AI outputs. This can include requiring human approval for specific remediation steps, implementing detailed logging and audit trails, and creating real-time alerts to notify security personnel of critical events.
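The checks and balances described above can be sketched as a small approval gate. Actions proposed by a generative AI model run automatically only if policy allows it. Sensitive actions wait for a human decision, and every outcome is written to an audit trail.

In this minimal Python sketch, the action names, the `REQUIRES_APPROVAL` policy and the `approver` callback are hypothetical. The callback stands in for a ticket, chat prompt or console confirmation. A production setup would persist the audit log and raise real-time alerts rather than keep records in memory.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Set

# Assumed policy: these actions always need a human sign-off.
REQUIRES_APPROVAL: Set[str] = {"isolate_host", "disable_account", "delete_file"}


@dataclass
class Action:
    name: str                            # hypothetical action identifier
    target: str                          # host, account, IP address...
    proposed_by: str = "genai-assistant"


@dataclass
class Gate:
    approver: Callable[[Action], bool]   # human decision point (hypothetical hook)
    audit: List[str] = field(default_factory=list)

    def _log(self, action: Action, decision: str) -> None:
        """Append a JSON audit record: who proposed what, and what happened."""
        self.audit.append(json.dumps({
            "ts": datetime.now(timezone.utc).isoformat(),
            "action": action.name,
            "target": action.target,
            "proposed_by": action.proposed_by,
            "decision": decision,
        }))

    def execute(self, action: Action, run: Callable[[Action], None]) -> bool:
        """Run the action only if policy allows it; log every decision."""
        if action.name in REQUIRES_APPROVAL and not self.approver(action):
            self._log(action, "rejected")
            return False
        run(action)
        self._log(action, "executed")
        return True


if __name__ == "__main__":
    executed: List[Action] = []
    # Hypothetical reviewer who refuses to isolate the domain controller.
    gate = Gate(approver=lambda a: a.target != "dc01")
    gate.execute(Action("block_ip", "198.51.100.7"), executed.append)  # no approval needed
    gate.execute(Action("isolate_host", "dc01"), executed.append)      # rejected by human
    gate.execute(Action("isolate_host", "laptop-42"), executed.append) # approved
    print("\n".join(gate.audit))
```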

Useful combinations could lie in integrating generative AI models with existing security solutions, such as SIEM, EDR, and Orchestration and Automation platforms, enabling seamless data exchange and analysis.

5. Conclusion about generative AI in cybersecurity

While AI and security integration may not be a silver bullet for all of cybersecurity’s pain points, it can significantly improve security operations for organizations. With advancements in AI technologies such as generative AI and large language models, the possibilities for AI to enhance security teams’ effectiveness are expanding. By leveraging AI to automate mundane tasks, streamline communications, and prioritize threats, organizations can better defend against the evolving cybersecurity landscape. Implementing automation platforms to interconnect AI with existing infrastructure will be a key factor in realizing the full potential of AI in cybersecurity.